Decoding-based dimensionality reduction (dDR)

dDR is a supervised dimensionality reduction method that we developed during my PhD to perform robust decoding analysis from finite data samples.

Background

One very common approach in systems neuroscience is decoding analysis. As researchers, we often ask: given neural activity alone, can we decode what the experimental condition was at any given time? The goal of this approach is typically to assign a functional label to a given neuron, group of neurons, or region of the brain. That is, if we observe that the activity of neurons in a particular region is informative about the visual environment, we can assign a “visual” label to this region. Over the years, this approach has helped researchers map out functional properties of a wide swath of brain regions.

Importantly, however, most decoding methods assume that both the first-order (mean) and second-order (variance and covariance) statistics of the data can be estimated reliably. In practice, this is not always a safe assumption, particularly today. Thanks to advances in neural recording technologies, the dimensionality of neural datasets is rapidly increasing, while experimental and behavioral constraints continue to limit the amount of data (observations) that can be collected in an experiment. As a result, second-order statistics, like the activity correlations between pairs of neurons, cannot be estimated reliably in most datasets.

As a part of my PhD, I worked on developing a new, simple dimensionality reduction method which takes advantage of low-dimensional structure in neural population data to enable reliable estimation of decoding accuracy. This project was carried out under the supervision of my PhD advisor, Dr. Stephen David. Here, I will give a high-level overview of the project targeted at a general data science audience familiar with linear algebra: I will illustrate the problem, explain how we solved it, and demonstrate the method's application using example neural data. For a more in-depth, technical discussion of the method and its applications, please refer to our full manuscript.

If you are interested in trying the method with your own data, instructions for downloading the dDR package are available at: https://github.com/crheller/dDR. A demo notebook is also included in this repository which demonstrates the standard use of the method. It also contains two example extensions of the method, which are discussed in our manuscript.

What is decoding?

Decoding analysis determines how well different conditions can be discriminated on the basis of the data collected in each respective condition. To make this explanation more concrete, I will continue to focus on neuroscience data here, but keep in mind that this idea can be extended to many types of data. In a typical systems neuroscience experiment, we record brain activity from subjects while presenting sensory stimuli to study how the brain represents this information, transforms it, and uses it to guide behavior.

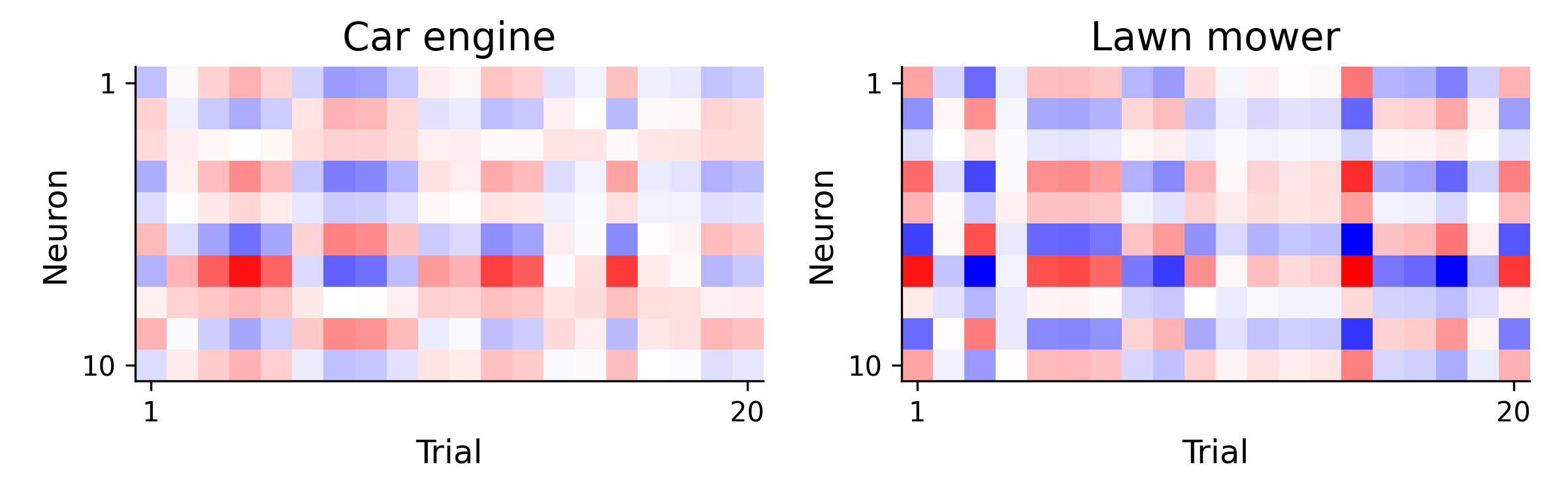

Let’s consider a simple experiment. Imagine that we want to know how the brain distinguishes between two different sounds - a car engine and a lawn mower. To study this, we measure the brain activity from a subject across many repeated presentations of each of these sounds. We call these presentations “trials.”

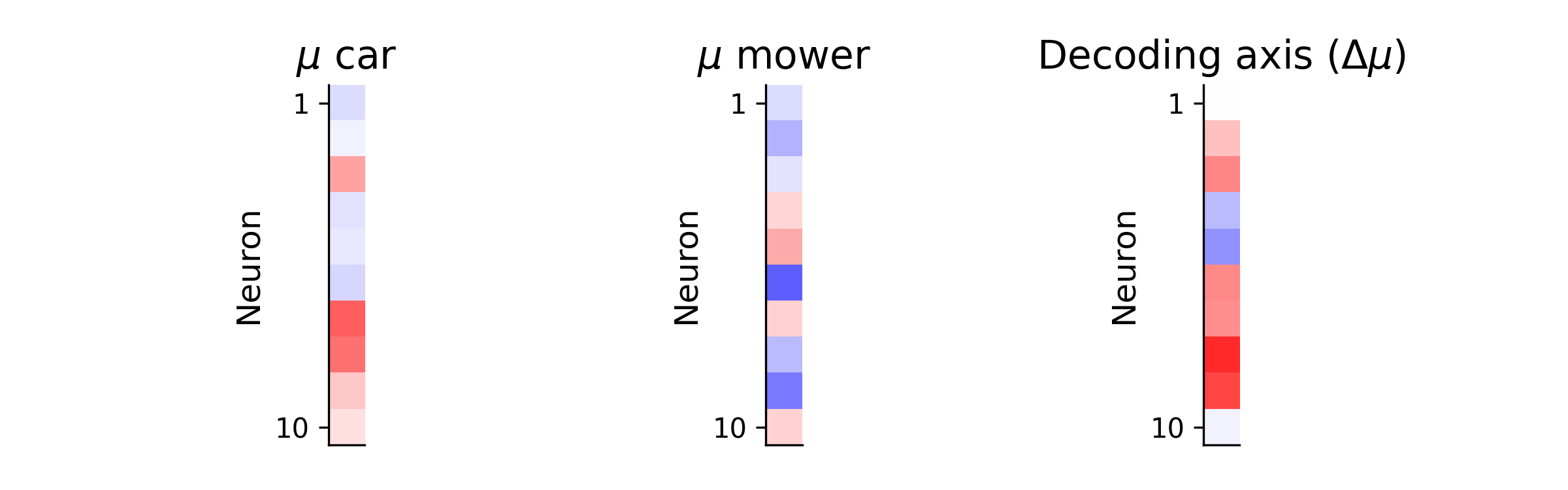

The goal of decoding is to use the neural activity shown above to determine which dimensions of the neural activity are most informative about the sound identity. The simplest way to do this is by computing the difference in the mean activity across trials between conditions.

The difference in mean activity between conditions can be thought of as a “decoding axis” (\(\Delta\mu\)). Geometrically, this is the axis in the state-space of neural activity along which the mean neural activity is most different between car and lawn mower. We can visualize this by projecting the single-trial neural activities (\(X_{car}\) and \(X_{mower}\)) onto this axis:

\[r_{car} = X_{car}\Delta\mu^{T}\]

\[r_{mower} = X_{mower}\Delta\mu^{T}\]

and plotting the resulting distributions for each sound:

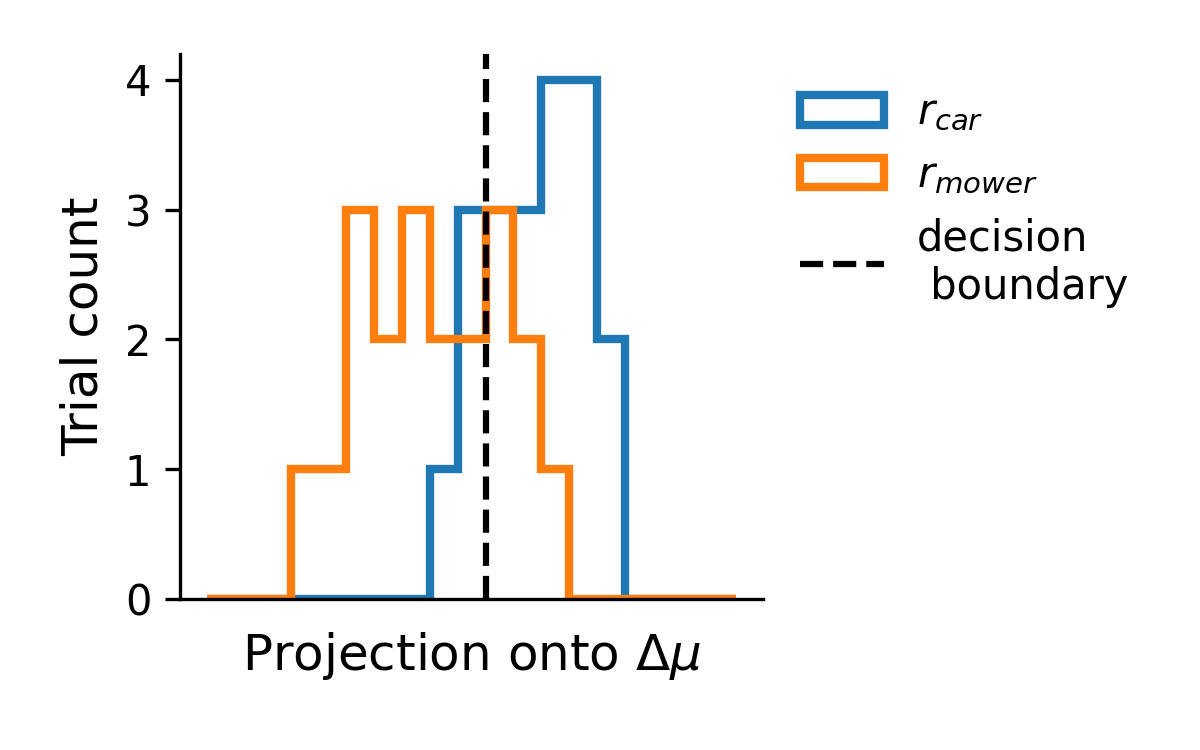
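The projection above can be sketched in a few lines of NumPy. This is only an illustration on simulated data: the trial and neuron counts, the random means, and the choice to scale \(\Delta\mu\) to unit length are all assumptions made for the example, not part of the analysis described here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical simulated experiment: 50 trials x 10 neurons for each sound
n_trials, n_neurons = 50, 10
mu_car = rng.normal(5.0, 1.0, size=n_neurons)
mu_mower = mu_car + rng.normal(0.0, 1.0, size=n_neurons)
X_car = mu_car + rng.normal(size=(n_trials, n_neurons))
X_mower = mu_mower + rng.normal(size=(n_trials, n_neurons))

# Decoding axis: difference of the trial-averaged activity between conditions
delta_mu = X_car.mean(axis=0) - X_mower.mean(axis=0)
delta_mu /= np.linalg.norm(delta_mu)  # unit length (an assumption; only rescales the readout)

# One-dimensional readout of every single trial
r_car = X_car @ delta_mu
r_mower = X_mower @ delta_mu

print(r_car.shape, r_mower.shape)
```

Histograms of `r_car` and `r_mower` would then give the two distributions shown in the figure.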

As we can see, in this one-dimensional readout of the neural activity, the car and mower distributions are fairly well separated. As a final step, we can quantify the decoding performance. For example, one method for quantification would be to draw a “decision boundary,” as shown above, and determine the percentage of trials on which the sound identity was correctly identified based on the neural activity. In the following sections, however, I will use a metric called \(d'\), based on signal detection theory, which can be thought of as the z-scored difference in the response to the two stimuli:

\[d' = \| z[r_{car}] - z[r_{mower}] \|\]

Motivation for dDR

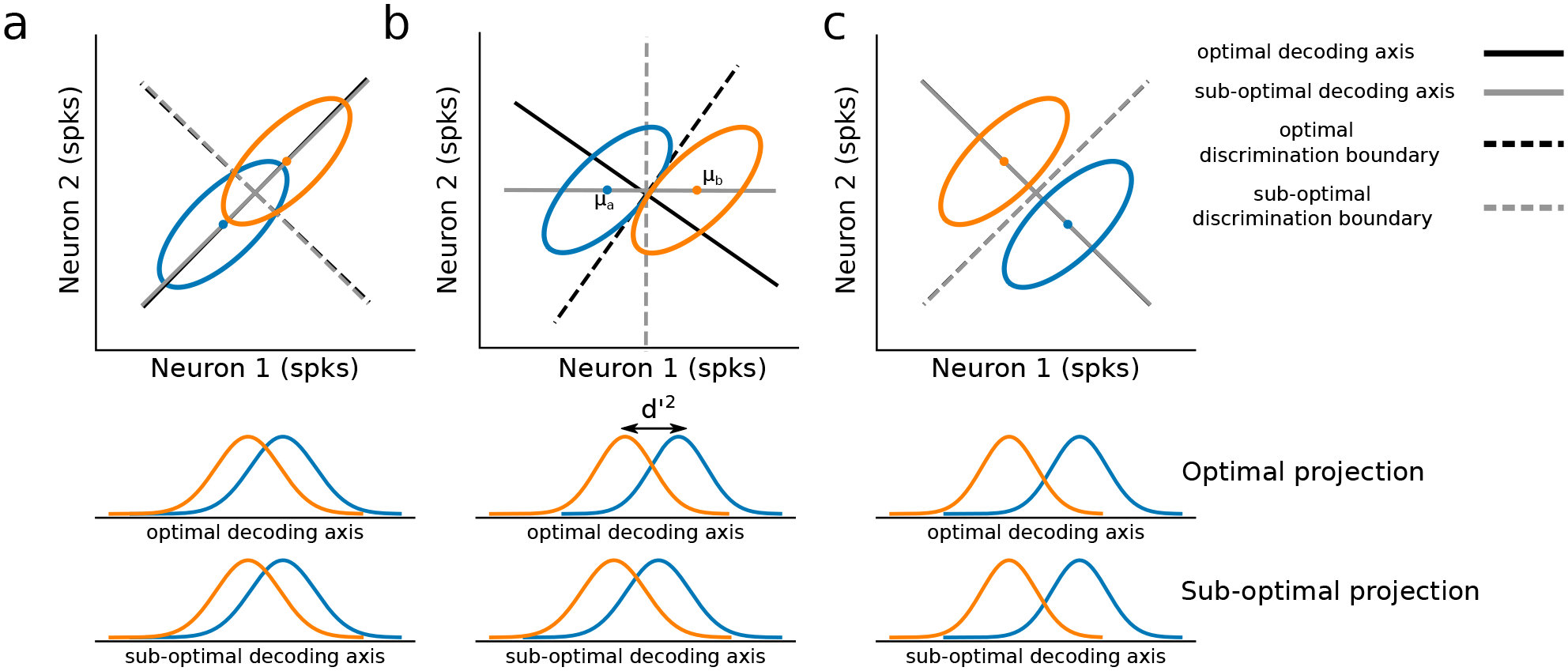

The method for decoding presented above depends only on the first-order statistics of the data - that is, only on the mean activity of each neuron in each condition. In some cases, however, this is not the optimal solution. Often, we can do a bit better than this if we consider not just the mean activity, but also the correlations between pairs of neurons. This is the approach taken by methods such as Linear Discriminant Analysis (LDA). To illustrate this idea, it is simpler to work with a two-dimensional dataset.

The example above illustrates that only under very special conditions (correlated activity either perfectly aligned with or perfectly orthogonal to \(\Delta\mu\)) is \(\Delta \mu\) the optimal linear decoding axis. Whenever this is not true, some benefit can be gained from factoring correlations into the computation of the decoding axis, and a method such as LDA is preferred.
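To make the comparison concrete, here is a minimal two-neuron sketch. It assumes \(d'\) is computed as the difference in projected means divided by the pooled standard deviation (a common signal-detection definition, assumed here to match the z-scored difference above), and it uses the textbook LDA axis \(\Sigma^{-1}\Delta\mu\). The covariance and means are made-up values chosen so that the correlated activity is neither aligned with nor orthogonal to \(\Delta\mu\).

```python
import numpy as np

def dprime(r_a, r_b):
    """Difference in means divided by the pooled standard deviation (assumed definition)."""
    pooled_var = 0.5 * (r_a.var(ddof=1) + r_b.var(ddof=1))
    return abs(r_a.mean() - r_b.mean()) / np.sqrt(pooled_var)

rng = np.random.default_rng(1)
n_trials = 5000

# Two neurons with positively correlated trial-to-trial variability
cov = np.array([[1.0, 0.8],
                [0.8, 1.0]])
mu_a = np.array([0.0, 0.0])
mu_b = np.array([1.0, 0.2])
X_a = rng.multivariate_normal(mu_a, cov, size=n_trials)
X_b = rng.multivariate_normal(mu_b, cov, size=n_trials)

# Axis 1: difference of means only
delta_mu = X_a.mean(axis=0) - X_b.mean(axis=0)

# Axis 2: LDA axis, which also accounts for the pooled covariance
sigma = 0.5 * (np.cov(X_a, rowvar=False) + np.cov(X_b, rowvar=False))
w_lda = np.linalg.solve(sigma, delta_mu)

d_dmu = dprime(X_a @ delta_mu, X_b @ delta_mu)
d_lda = dprime(X_a @ w_lda, X_b @ w_lda)
print(f"d' along delta-mu: {d_dmu:.2f}, d' along LDA axis: {d_lda:.2f}")
```

With this many trials the covariance estimate is good, so the LDA axis should separate the two conditions better than \(\Delta\mu\) does. Note that both axes are fit and evaluated on the same trials here, which is only reasonable because data are plentiful.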

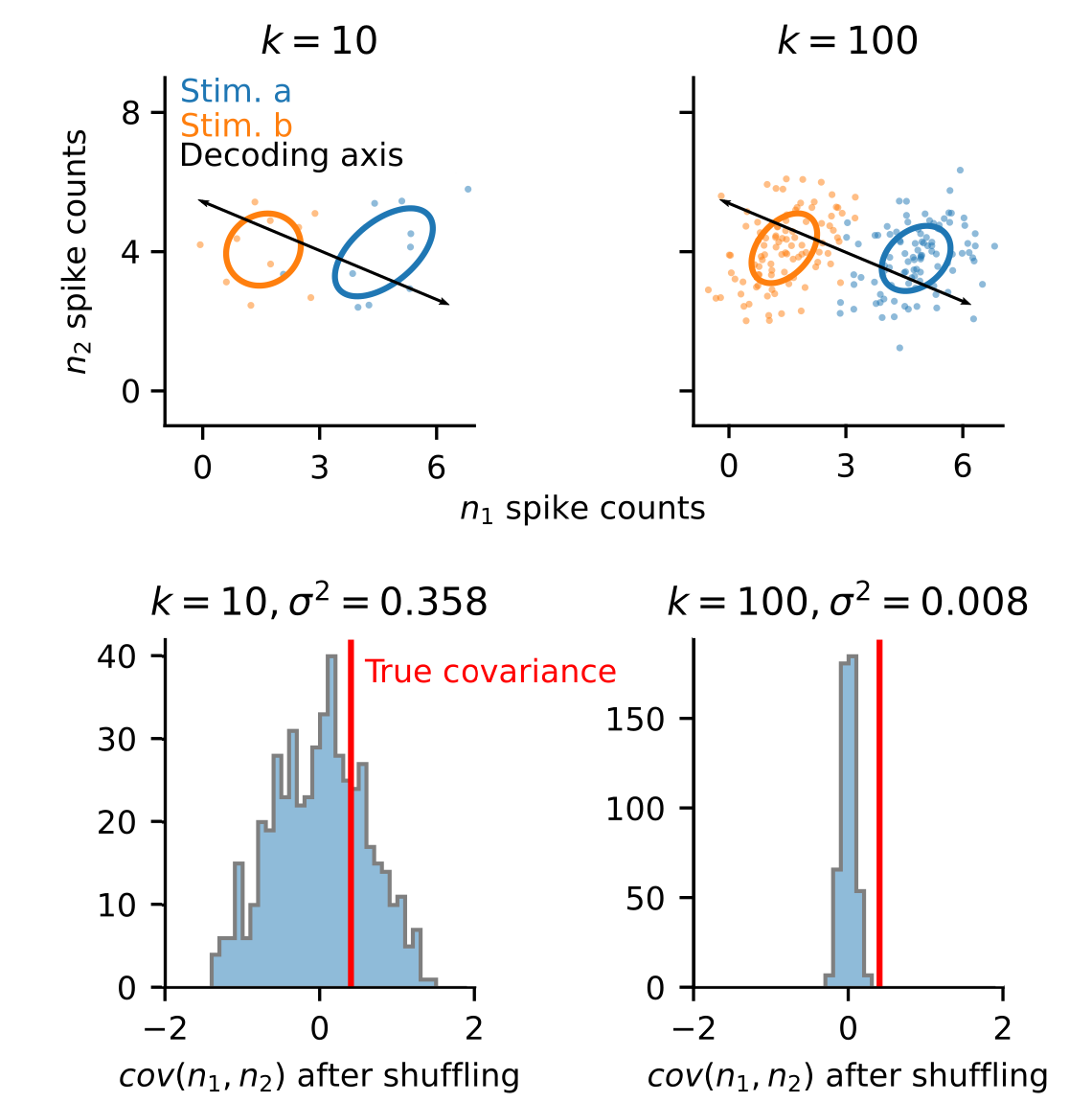

In practice, however, it is not so simple. Second-order statistics (correlations) require much more data to estimate accurately. Therefore, with limited data, estimates of neural covariance can often be inaccurate. This can then lead to extreme overfitting and poor performance on held-out validation data when applying methods like LDA, as illustrated with the simulation below.

In most neuroscience experiments, we operate in the regime where we have too few trials to reliably estimate covariance (i.e., reality is closest to panel a, in the figure above). Thus, if we wish to apply optimal linear decoding techniques, like LDA, we need to develop new analytical approaches to first transform our data into a format that is suitable.
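The overfitting problem can be reproduced with a small simulation. This is a sketch under assumed settings (40 neurons, 50 training trials per condition, independent unit-variance noise, and the pooled-standard-deviation definition of \(d'\)); it is not the simulation shown in the figure.

```python
import numpy as np

def dprime(r_a, r_b):
    """Difference in means divided by the pooled standard deviation (assumed definition)."""
    pooled_var = 0.5 * (r_a.var(ddof=1) + r_b.var(ddof=1))
    return abs(r_a.mean() - r_b.mean()) / np.sqrt(pooled_var)

rng = np.random.default_rng(2)
n_neurons, n_train, n_test = 40, 50, 2000
mu_a = np.zeros(n_neurons)
mu_b = np.full(n_neurons, 0.2)

def sample(mu, n):
    # Independent noise: the true covariance is the identity matrix
    return mu + rng.normal(size=(n, n_neurons))

A_train, B_train = sample(mu_a, n_train), sample(mu_b, n_train)
A_test, B_test = sample(mu_a, n_test), sample(mu_b, n_test)

# Fit both decoding axes on the small training set only
delta_mu = A_train.mean(axis=0) - B_train.mean(axis=0)
sigma = 0.5 * (np.cov(A_train, rowvar=False) + np.cov(B_train, rowvar=False))
w_lda = np.linalg.solve(sigma, delta_mu)

train_d = dprime(A_train @ w_lda, B_train @ w_lda)
test_d = dprime(A_test @ w_lda, B_test @ w_lda)
test_d_dmu = dprime(A_test @ delta_mu, B_test @ delta_mu)
print(f"LDA train d': {train_d:.2f}, LDA test d': {test_d:.2f}, "
      f"delta-mu test d': {test_d_dmu:.2f}")
```

The gap between `train_d` and `test_d` is the signature of overfitting: the LDA axis has adapted to noise in the estimated covariance matrix, so its apparent performance on the training trials does not carry over to held-out trials.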

dDR

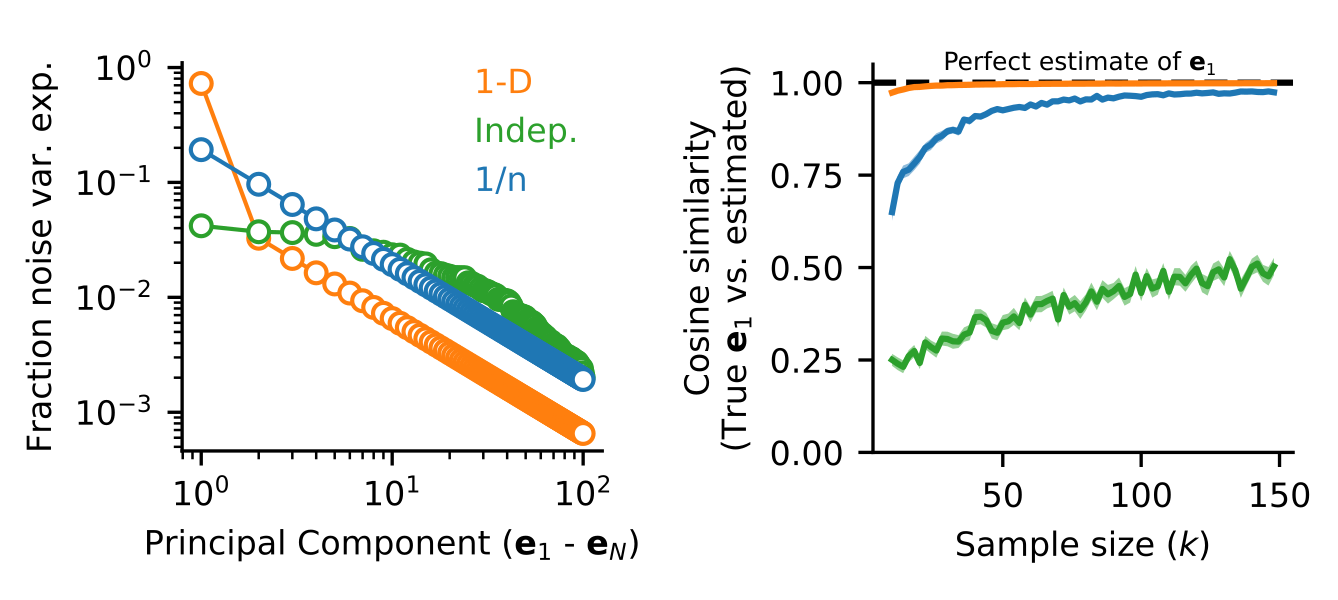

dDR takes advantage of the observation that covariance patterns in neural activity are typically low-dimensional. That is, if you were to perform PCA on a typical neural dataset, you would likely find that it has a relatively small number of significant dimensions. In other words, the covariance matrix can be described using only a handful of eigenvectors (\(e\)). Unlike single pairwise covariance coefficients, these high-variance eigenvectors can be estimated reliably even from very few trials, as demonstrated in the simulation below.

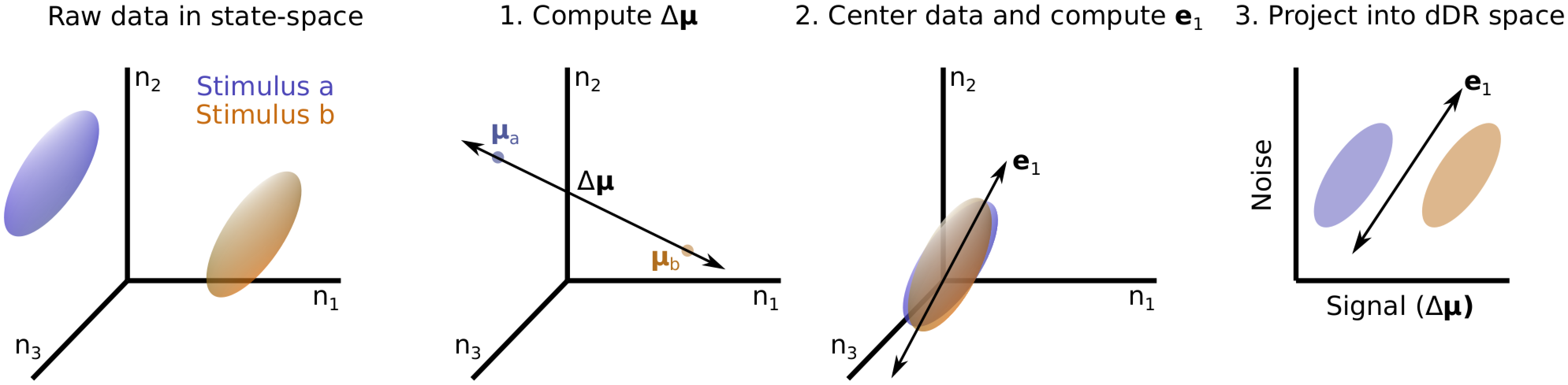
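A rough way to check this claim yourself is to simulate data with one dominant shared dimension and ask how well it is recovered as the number of trials changes. Everything in this sketch is an assumption made for illustration: the covariance is an identity matrix plus a single strong rank-one component, and the two reliability measures are the alignment of the estimated first eigenvector with the true one and the correlation between estimated and true pairwise covariances.

```python
import numpy as np

rng = np.random.default_rng(3)
n_neurons = 100

# True covariance: independent noise plus one strong shared dimension u
u = rng.normal(size=n_neurons)
u /= np.linalg.norm(u)
true_cov = np.eye(n_neurons) + 50.0 * np.outer(u, u)
upper = np.triu_indices(n_neurons, k=1)  # indices of all neuron pairs

def reliability(n_trials):
    """Return (eigenvector alignment, pairwise covariance correlation)."""
    X = rng.multivariate_normal(np.zeros(n_neurons), true_cov, size=n_trials)
    sample_cov = np.cov(X, rowvar=False)
    # eigh returns eigenvalues in ascending order, so the last column is PC1
    _, evecs = np.linalg.eigh(sample_cov)
    alignment = abs(evecs[:, -1] @ u)
    pairwise = np.corrcoef(sample_cov[upper], true_cov[upper])[0, 1]
    return alignment, pairwise

results = {n: reliability(n) for n in (10, 20, 50, 200)}
for n, (alignment, pairwise) in results.items():
    print(f"{n:4d} trials: PC1 alignment = {alignment:.2f}, "
          f"pairwise covariance correlation = {pairwise:.2f}")
```

An alignment near 1 means the estimated first principal component points along the true shared dimension. How the two measures compare will depend on how strong the shared dimension is relative to the independent noise.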

Thus, the first principal component of trial-to-trial covariance and the respective mean of neural activity under each condition define a unique two-dimensional plane in the neural activity state space which (1) can be estimated robustly and (2) captures the two key features of population activity required for optimal linear decoding analysis. We call the projection into this space dDR. The full procedure is sketched out graphically below:

Once projected into the dDR space, we can perform our optimal linear decoding analysis of choice without as much concern about overfitting to noisy estimates of pairwise covariance. Thus, dDR can be viewed as a sort of regularization to be performed prior to estimating decoding accuracy. In the next two sections, we briefly highlight dDR’s application to simulated and real data.
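The procedure can be sketched in NumPy as follows. This is my reading of the description above, not the API of the dDR package: `ddr_basis` is a hypothetical helper, the noise axis is made orthogonal to \(\Delta\mu\) with a Gram-Schmidt step (an assumption), and the simulated data are made up for the example.

```python
import numpy as np

def ddr_basis(X_a, X_b):
    """Hypothetical helper: (2, n_neurons) orthonormal basis spanning
    the delta-mu axis and the first principal component of the noise."""
    delta_mu = X_a.mean(axis=0) - X_b.mean(axis=0)
    e_dmu = delta_mu / np.linalg.norm(delta_mu)

    # Trial-to-trial variability: remove each condition's mean, then pool
    resid = np.vstack([X_a - X_a.mean(axis=0), X_b - X_b.mean(axis=0)])
    # Rows of vt are principal axes of the residuals, largest variance first
    _, _, vt = np.linalg.svd(resid, full_matrices=False)
    pc1 = vt[0]

    # Gram-Schmidt: keep only the part of PC1 orthogonal to the delta-mu axis
    # (this fails if PC1 is exactly parallel to delta-mu)
    e_noise = pc1 - (pc1 @ e_dmu) * e_dmu
    e_noise /= np.linalg.norm(e_noise)
    return np.vstack([e_dmu, e_noise])

# Simulated data: 100 neurons, 30 trials per condition, one shared noise dimension
rng = np.random.default_rng(4)
n_neurons, n_trials = 100, 30
shared = rng.normal(size=n_neurons)
shared /= np.linalg.norm(shared)
mu_a = np.zeros(n_neurons)
mu_b = rng.normal(scale=0.3, size=n_neurons)

def sample(mu, n):
    latent = rng.normal(scale=3.0, size=(n, 1))  # shared fluctuation on each trial
    return mu + latent * shared + rng.normal(size=(n, n_neurons))

X_a, X_b = sample(mu_a, n_trials), sample(mu_b, n_trials)

basis = ddr_basis(X_a, X_b)
Z_a, Z_b = X_a @ basis.T, X_b @ basis.T  # each is (n_trials, 2)

# Any linear decoder can now be fit in two dimensions, e.g. the LDA axis
sigma_2d = 0.5 * (np.cov(Z_a, rowvar=False) + np.cov(Z_b, rowvar=False))
w_2d = np.linalg.solve(sigma_2d, Z_a.mean(axis=0) - Z_b.mean(axis=0))
print(basis.shape, Z_a.shape, w_2d.shape)
```

After the projection only a 2 x 2 covariance matrix has to be estimated, rather than a 100 x 100 one. In a real analysis the basis and the decoding axis would be fit on training trials and evaluated on held-out trials.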

Application to neural data

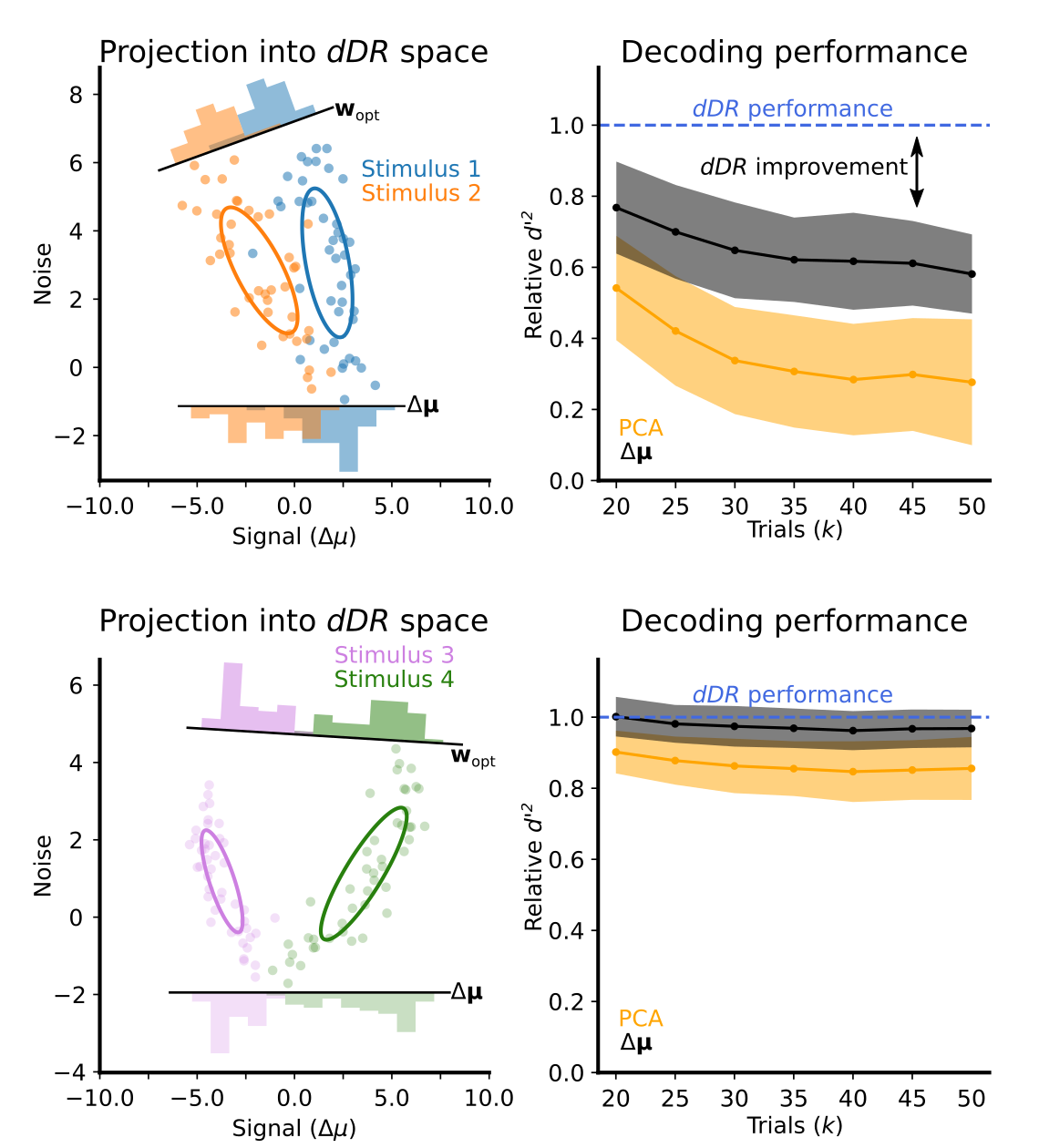

The sensitivity of decoding methods to overfitting is a known problem in neural data analysis. This is commonly dealt with either by using the \(\Delta \mu\) decoding approach discussed in “What is decoding?” or by reducing the dimensionality of the data using principal component analysis (PCA). While each of these approaches is valid, they often result in non-optimal solutions. To illustrate this, we applied all three methods (\(\Delta\mu\), PCA, and dDR) to the same dataset and compared their cross-validated decoding performance as a function of the number of trials.

In all cases, we found that dDR performed as well as, or better than, both the \(\Delta \mu\) and PCA approaches. We found that dDR was particularly beneficial in cases where (1) the two stimuli evoked similar mean activity in the neurons and (2) the principal axis of covariance was not perfectly aligned with, or orthogonal to, the \(\Delta \mu\) axis, as discussed in “Motivation for dDR.”